Step 5: Responsible AI Governance

Building Trust, Securing the Future

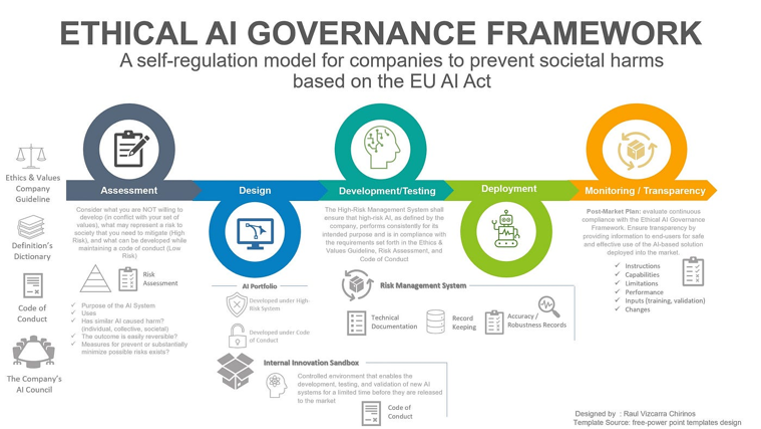

AI is no longer a ‘nice-to-have’. It’s shaping strategic decision-making, optimising operations, and influencing human lives. But without a clear governance framework, AI introduces risk—ethical, operational, legal and reputational. That’s why Step 5 focuses on embedding responsibility, oversight, and control into your AI strategy—before deployment even begins.

Why This Step Matters

Without governance, you don’t scale AI—you scale risk.

Whether you're automating processes, enhancing decision-making, or deploying predictive models, you need:

✅ Clarity on who owns AI decisions

✅ Standards to ensure fairness and transparency

✅ Controls to prevent drift, bias, or breaches

AI is powerful. But it must also be accountable.

What's Included in Step 5?

✅ Definition of the Responsible AI (RAI) Framework

- Grounded in ethics, fairness, transparency, and accountability

- Tailored to your organisation’s industry, structure and risk profile

- Aligned with emerging UK, EU and global standards

✅ AI Evaluation Tool

- Used to evaluate AI initiatives before deployment

- Covers bias testing, explainability, privacy, and alignment with business and legal policies
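
To make the bias-testing element concrete, here is a minimal Python sketch of one check an evaluation tool of this kind might run before deployment. It is an illustration only, not the tool itself: the `Prediction` record, the function names and the 0.1 tolerance are all hypothetical, and demographic parity is just one of several fairness measures an organisation could choose.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    group: str      # protected-attribute value, e.g. "A" or "B" (hypothetical labels)
    approved: bool  # the model's positive or negative decision


def selection_rates(predictions):
    """Return the share of positive decisions for each group."""
    totals, positives = {}, {}
    for p in predictions:
        totals[p.group] = totals.get(p.group, 0) + 1
        positives[p.group] = positives.get(p.group, 0) + int(p.approved)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(predictions):
    """Largest difference in selection rate between any two groups."""
    rates = selection_rates(predictions)
    return max(rates.values()) - min(rates.values())


def passes_bias_gate(predictions, max_gap=0.1):
    """True if the gap is within the tolerance (0.1 is an assumed value)."""
    return demographic_parity_gap(predictions) <= max_gap
```

In practice the tolerance, the protected attributes and the choice of metric would be set by the governance model rather than hard-coded, and a failed check would feed the agreed escalation path rather than silently block a release.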

✅ Governance Model Development

- Establish governance roles (data ethics officers, AI review boards, etc.)

- Create policy guidelines for procurement, deployment and model lifecycle management

- Define escalation paths and responsibility ownership

✅ AI Security Framework

- Identify and address security vulnerabilities

- Implement model auditability, traceability, and fail-safes

- Align AI risk with enterprise cyber and risk strategies

✅ AI Centre of Excellence (CoE) Blueprint

- Define a hub-and-spoke model for AI knowledge, ownership and innovation

- Centralise capability building and reuse of tools, processes, frameworks

✅ Workshop-Driven Process

- Co-designed with stakeholders across IT, compliance, strategy, and business units

- Facilitated by expert consultants with sector experience

- Outcome: Agreed RAI Framework & Governance Model ready for implementation

The Outcome

✅ A clear, pragmatic and board-ready Responsible AI Framework

✅ Improved trust and adoption across your organisation

✅ Greater alignment with legal, regulatory and public expectations

✅ A foundation for sustainable, ethical AI deployment at scale

Ready to Lead with Integrity?

Governance isn’t a blocker—it’s a catalyst for responsible innovation. Let’s work together to embed AI that’s not just effective, but ethical, secure and scalable.

Book a governance discovery session now. Take a confident, compliant step forward with AI.
